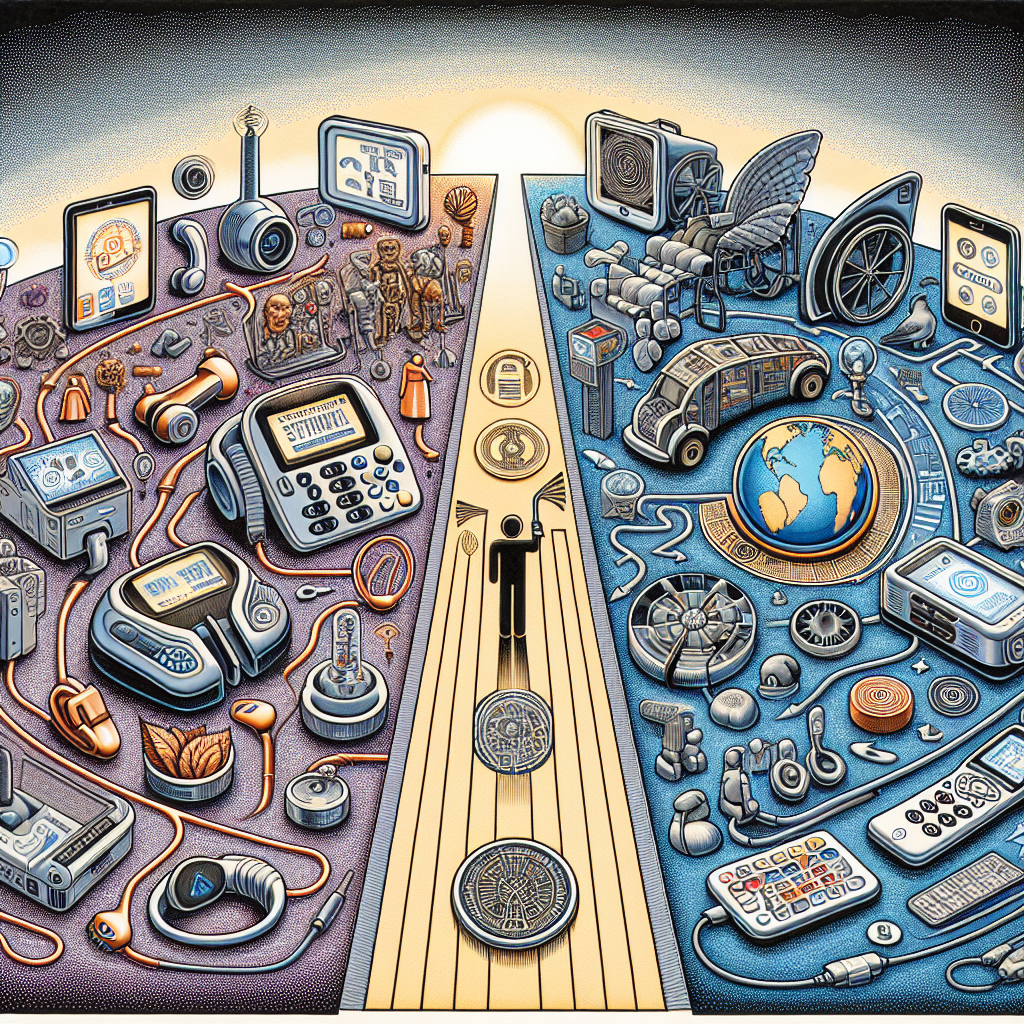

Sign languages are rich, fully developed languages used by the deaf and hard-of-hearing communities around the world, and they are vital tools for expression, understanding, and interaction. As technology progresses, new approaches to sign language interpretation are emerging that promise to improve accessibility and comprehension for users worldwide. These technologies aim to bridge the communication gap between people who sign and people who do not, fostering inclusivity and equal opportunity. This article surveys how these technologies have developed and what it takes to turn them into effective interpretation tools. We look at sign language translation tools, advances in machine learning, and real-time interpretation devices that together are changing how the deaf community communicates, and we identify the challenges that still need attention as we work toward a more inclusive society. Sensor gloves, AI-driven software, and videophones each illustrate a different approach to today's communication barriers.

The significance of these solutions is multifaceted, affecting education, employment, social interaction, and healthcare for deaf individuals. Interpreters, both human and automated, play a crucial role in everyday situations, from classrooms and workplaces to medical appointments and public services. Understanding how emerging technology helps sign language users overcome these challenges is key to appreciating the social and cultural impact of these advances. These developments also raise important questions about technology, accessibility, and the ethics of bridging communication divides.

Wearable Devices: Revolutionizing How We Interpret

One of the most innovative advances in sign language interpretation is the development of wearable devices designed to translate sign language into spoken or written language, and vice versa. These devices typically combine specialized sensors, AI algorithms, and data processing to recognize the gestures a signer performs. Electronic gloves, for instance, embed sensors that detect and track hand and finger movements. By interpreting these signals, the gloves can translate gestures into text or speech, giving deaf individuals a new way to communicate when no interpreter is present. Because translation happens as the user signs, these wearables also open the door to real-time translation, reducing the delays that can come with arranging human interpretation.
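To make the glove idea concrete, the sketch below shows one simple way sensor readings could be turned into a label: compare a reading of five finger-bend values against stored templates and pick the nearest one. This is a minimal illustration under stated assumptions, not how any particular product works. The sensor layout (one normalized bend value per finger), the template values, the handshape names, and the `classify_handshape` function are all hypothetical.

```python
import math

# Hypothetical calibration templates: one normalized bend value per finger
# (thumb, index, middle, ring, little), where 0.0 = straight and 1.0 = fully bent.
# These values are illustrative assumptions, not measurements from a real glove.
TEMPLATES = {
    "flat hand": (0.1, 0.0, 0.0, 0.0, 0.0),
    "fist": (0.6, 1.0, 1.0, 1.0, 1.0),
    "point": (0.7, 0.0, 1.0, 1.0, 1.0),
}


def classify_handshape(reading, max_distance=0.5):
    """Return the label of the nearest template, or None if none is close enough."""
    if len(reading) != 5:
        raise ValueError("expected one bend value per finger")
    best_label, best_dist = None, float("inf")
    for label, template in TEMPLATES.items():
        dist = math.dist(reading, template)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_label, best_dist = label, dist
    # Reject readings that are far from every template instead of guessing.
    return best_label if best_dist <= max_distance else None


print(classify_handshape((0.65, 0.05, 0.9, 0.95, 1.0)))  # point
print(classify_handshape((0.5, 0.5, 0.5, 0.5, 0.5)))     # None
```

A static nearest-template match like this only covers handshape. Signs also depend on movement, hand orientation, location, and facial expression, which is why practical systems add motion sensors and trained models on top of raw bend readings.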

Another emerging wearable technology is smart glasses, which display real-time subtitles of spoken language directly in the wearer's field of vision. With live captioning, users can follow and take part in conversations without relying solely on sign language. Smart glasses have potential applications in classrooms, conferences, and public events, where they could provide a seamless and inclusive communication experience. However, battery life, dependence on internet connectivity, and designs that users find aesthetically acceptable all require further work.

Artificial Intelligence: Pioneering Real-Time Interpretation

Artificial intelligence (AI) is at the forefront of sign language interpretation technology, and machine learning algorithms are central to building more accurate and efficient translation systems. By training on large datasets, these algorithms learn to recognize the complex hand gestures and facial expressions that are essential components of sign language. AI-driven software can then convert the recognized signs into voice or text, making communication with people who do not sign easier and faster. One notable application is the use of convolutional neural networks (CNNs) to process images of hand gestures. A CNN extracts spatial features such as hand shape and position from each video frame; these features are often combined with sequence models that track movement across frames, and together they form the basis of a translation model.
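The core operation inside a convolutional layer is a small filter slid across an image, producing a "feature map" that is large wherever the image matches the filter's pattern. The pure-Python sketch below shows a single filter followed by a ReLU activation on a tiny synthetic frame. It is illustrative only: the frame and the hand-picked edge-detecting kernel are assumptions, and a real CNN learns many such filters from data and is built with a framework such as PyTorch or TensorFlow rather than hand-written loops.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation a CNN layer computes."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


def relu(feature_map):
    """Zero out negative responses, as a CNN activation layer does."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Tiny synthetic grayscale frame: dark background (0) on the left,
# bright "hand" region (1) on the right.
frame = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked vertical-edge kernel; in a trained CNN these weights are learned.
kernel = [
    [-1, 1],
    [-1, 1],
]

features = relu(conv2d(frame, kernel))
print(features)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map responds only in the column where dark background meets the bright region, which is the kind of low-level cue (an edge of the hand) that deeper layers combine into handshape features.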

Beyond recognizing individual gestures, AI is also advancing conversational systems that can take context, nuance, and regional variation into account. This matters because sign languages are not universal: American Sign Language (ASL) and British Sign Language (BSL), for instance, are distinct languages with different vocabularies and grammar, even though both are used in predominantly English-speaking countries. Models can be trained on each language and its regional variants so that translation is precise and appropriate to context. Building such systems is challenging, however: training effective models requires extensive datasets, and systems may still misinterpret signers because they struggle to capture complex emotions and other subtle cues.

Machine Learning and Natural Language Processing

Machine learning and natural language processing (NLP) provide the backbone for intelligent systems capable of real-time sign language interpretation. Together they make it possible to build translation models that account for the syntax, grammar, and semantics of sign languages, which differ from those of the spoken languages around them. Researchers continue to develop algorithms that model the flow and structure of sign languages in order to make machine translation more accurate. NLP helps analyze the context of a conversation, smoothing interaction between sign language users and their conversation partners. Combining machine learning models with NLP techniques helps address the challenge of translating not only individual signs but also the contextual layers of meaning that accompany every conversation.

Integrating NLP with sign language processing tools makes possible applications such as virtual assistants that respond to commands given in sign language. Powered by machine learning algorithms, these assistants can understand specific instructions delivered through signs. The main challenge is the diversity of sign languages, which calls for comprehensive training datasets that reflect the varied linguistic makeup of users worldwide. Engineers and linguists must collaborate to refine these models for accuracy and understanding so that technology can support meaningful interactions.
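As a rough illustration of the last step in such an assistant, the sketch below maps a sequence of recognized signs to a command. It assumes an upstream recognizer already outputs sign glosses (written in capitals, a common convention for transcribing signs); the glosses, intent names, and the `match_intent` function are hypothetical. Matching on the set of glosses rather than their order is a deliberately crude way to tolerate word order that does not follow English.

```python
# Hypothetical intent table: each intent fires when all of its glosses appear.
# Gloss spellings and intent names are illustrative assumptions.
INTENTS = {
    "lights_on": {"LIGHT", "ON"},
    "lights_off": {"LIGHT", "OFF"},
    "weather_today": {"WEATHER", "TODAY"},
}


def match_intent(glosses):
    """Return the intent whose required glosses all appear, preferring the most specific."""
    seen = {g.upper() for g in glosses}
    best, best_size = None, 0
    for intent, required in INTENTS.items():
        # Order-insensitive: only asks whether every required gloss was signed.
        if required <= seen and len(required) > best_size:
            best, best_size = intent, len(required)
    return best


print(match_intent(["TODAY", "WEATHER", "WHAT"]))  # weather_today
print(match_intent(["LIGHT", "ON"]))               # lights_on
print(match_intent(["HELLO"]))                     # None
```

A production system would replace this lookup with a trained language model that also uses non-manual signals such as facial expression, which carry grammatical information that a list of glosses cannot capture.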

Videophone Technology: Enhancing Communication Platforms

Video relay services (VRS) and videophones have become widely used communication tools in the deaf community. Unlike traditional phones, videophones let users hold conversations in sign language over video, either directly with other signers or, through VRS, with hearing callers via an interpreter who relays between sign and speech. The technology continues to evolve, with newer models offering high-definition video and lower latency for clearer communication. To achieve widespread adoption, videophone and VRS platforms must prioritize user-friendly interfaces, accessibility features, and compatibility with existing telecommunications infrastructure.

Innovations in video compression and bandwidth optimization further improve the efficiency and reliability of videophone technology. By reducing data usage and improving transmission speed, they help sign language users hold uninterrupted conversations. VRS centers currently depend on skilled human interpreters; integrating automatic sign language recognition into these platforms could reduce that dependence and give deaf individuals a more autonomous way to communicate, including in remote or underserved areas.
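One idea behind video compression is that consecutive frames are mostly identical: in a signing video, the background barely changes while the hands move. The sketch below illustrates this with simple frame differencing, sending only the pixels that changed. It is a simplified stand-in, not how real codecs such as H.264 work (they use motion-compensated prediction and transform coding). The flattened 8-pixel frames and both function names are hypothetical.

```python
def encode_delta(prev, curr, threshold=0):
    """Return (index, new_value) pairs for pixels that changed by more than threshold.

    threshold=0 is lossless; a larger threshold drops small changes (lossy).
    """
    if len(prev) != len(curr):
        raise ValueError("frames must be the same size")
    return [(i, c) for i, (p, c) in enumerate(zip(prev, curr)) if abs(c - p) > threshold]


def apply_delta(prev, delta):
    """Rebuild the current frame from the previous frame plus the changed pixels."""
    frame = list(prev)
    for i, value in delta:
        frame[i] = value
    return frame


# Flattened 8-pixel grayscale frames: the bright "hand" region shifts one pixel right.
prev_frame = [10, 10, 200, 200, 10, 10, 10, 10]
curr_frame = [10, 10, 10, 200, 200, 10, 10, 10]

delta = encode_delta(prev_frame, curr_frame)
print(delta)                                         # [(2, 10), (4, 200)]
print(apply_delta(prev_frame, delta) == curr_frame)  # True
```

Only 2 of the 8 pixel values need to be sent for this frame. The same intuition explains why a plain, static background behind a signer tends to use less bandwidth than a busy one.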

Gesture Recognition and Holographic Interpretation

Recent advances in gesture recognition and holographic technology open exciting possibilities for the future of sign language interpretation. Gesture recognition uses sensors and cameras to capture the movement and position of the hands and body; algorithms then decode these gestures and convert them into spoken words or text. Combining multiple sensors, such as depth cameras alongside ordinary cameras, can make capture more robust across different lighting conditions and environments.
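One classic technique for decoding a captured gesture is dynamic time warping (DTW), which compares two motion trajectories even when one is performed faster or slower than the other. The sketch below assumes a tracker (camera or depth sensor) already provides the wrist position in each frame as normalized (x, y) coordinates; the template gestures and their labels are hypothetical. Modern systems mostly rely on learned models, so treat this as an illustration of matching motion over time rather than a recommended design.

```python
import math


def dtw_distance(a, b):
    """Dynamic time warping distance between two sequences of (x, y) points."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = cheapest cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            # Extend the best of: repeat a point in b, repeat a point in a, or advance both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def recognize(trajectory, templates):
    """Return the label of the template with the smallest DTW distance."""
    return min(templates, key=lambda label: dtw_distance(trajectory, templates[label]))


# Hypothetical wrist trajectories in image coordinates (y decreases as the hand rises).
templates = {
    "swipe right": [(0.0, 0.5), (0.5, 0.5), (1.0, 0.5)],
    "raise": [(0.5, 1.0), (0.5, 0.5), (0.5, 0.0)],
}
# The same rightward motion performed more slowly, so it spans more frames.
observed = [(0.0, 0.5), (0.25, 0.5), (0.5, 0.5), (0.75, 0.5), (1.0, 0.5)]

print(recognize(observed, templates))  # swipe right
```

DTW's tolerance for speed differences is the design point here: the observed gesture has five frames and the template three, yet they still align closely.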

Holographic interpretation is another promising technology: it projects three-dimensional images to create realistic signing avatars. This approach can simulate face-to-face interaction and support remote communication with a high level of immersion. Holographic avatars could act as dynamic interpreters, translating between languages or teaching sign language to learners. Although the technology is still in its infancy, it has the potential to transform sign language accessibility. Improvements in capture resolution, cost, and portability will be needed before it can reach a broad audience.

Conclusion

The landscape of sign language interpretation is evolving rapidly, driven by emerging technologies that promise to reshape how deaf individuals communicate in daily life. From wearable devices and AI-powered software to machine learning and videophone advances, these technologies are pushing the boundaries of sign language translation. They not only provide essential services that help sign language users interact with the hearing world but also pave the way toward a more inclusive society with fewer communication barriers.

However, these efforts face real challenges. Compatibility, privacy, cost, and the need for large, diverse training datasets will all shape the future of these technologies. Developers, researchers, and advocates must work together to address these issues responsibly while keeping the focus on accessibility and inclusion for deaf and hard-of-hearing communities worldwide. The meeting of technology and translation marks significant progress toward a world where people can communicate freely, overcoming obstacles that have long hindered social interaction.

Going forward, integrating emerging technologies into sign language interpretation will require careful attention to the linguistic and cultural features unique to sign languages. As these technologies mature, they could give deaf individuals far greater autonomy and connection to the wider world. It is equally important to sustain dialogue about ethics, implementation, and the authentic representation of sign language within technological systems. Realizing these aims demands a collective effort: championing innovation, raising awareness, and fostering appreciation for the diversity of human communication.

Frequently Asked Questions

1. What are the emerging technologies in sign language interpretation?

Emerging technologies in sign language interpretation are new tools designed to help sign language users communicate with people who do not sign. They include software applications, wearable devices, and machine learning algorithms. One prominent example is smart gloves that translate hand signs into spoken words: sensors in the gloves capture the wearer's movements and gestures, which are then converted into audio output, bridging the gap between sign language users and non-users.

AI-driven software has also made significant strides in real-time video interpretation. These programs analyze sign language captured by a camera and translate it with little delay, allowing smoother, more natural interactions. Augmented reality (AR) and virtual reality (VR) platforms are also beginning to provide immersive, engaging environments for teaching and learning sign language, benefiting both deaf individuals and those who want to communicate with them.

These emerging technologies aim to foster inclusivity by breaking down communication barriers, thus offering sign language users more opportunities in both personal and professional contexts.

2. How do these technologies improve accessibility for sign language users?

Emerging interpretation technologies improve accessibility by enabling real-time translation between sign language and spoken or written language. Traditional interpretation is limited by availability, because a qualified interpreter must be present or scheduled. Technology can offer a more immediate alternative: with a wearable device, for example, users can communicate independently without needing an interpreter for every interaction.

Software and mobile apps also give users access to large sign language databases, making it easier to move between languages and dialects. These tools especially benefit people in regions where interpreters for a particular sign language are scarce, making communication more universal and inclusive.

As the accuracy and speed of these technologies improve, they can also reduce misunderstandings, making educational, professional, and social settings more accommodating to sign language users. This greater accessibility is crucial for ensuring that deaf and hard-of-hearing people can participate fully in society, leading to better opportunities in employment, education, and social life.

3. What are the challenges faced by these new technologies?

While promising, emerging technologies in sign language interpretation face several challenges. First, capturing the nuances and regional variations of sign language is complex. Sign language is not universal: there are more than 300 sign languages worldwide, each with its own grammar and syntax, and ensuring that technology covers this diversity is an ongoing challenge.

Real-time translation also requires significant computing power and sophisticated algorithms, particularly for approaches based on AI and machine learning. Developing algorithms that process sign language data accurately and efficiently under varied lighting conditions and environments is a further challenge for developers.

Interfaces must also be user-friendly for people at every skill level, from tech-savvy users to beginners, so that everyone can benefit from new advances. Finally, cost is a barrier: high-tech equipment can be expensive and out of reach for many users in lower-income communities.

4. How do these technologies foster inclusivity?

Emerging sign language interpretation technologies foster inclusivity by breaking down communication barriers between sign language users and those unfamiliar with it. By providing tools that facilitate effective communication, these innovations help ensure that deaf and hard-of-hearing individuals can access the same opportunities as others.

In educational settings, for instance, tools such as AR-based sign language learning platforms allow for interactive learning experiences. These platforms help non-signers learn sign language more efficiently, promoting a culture of understanding and acceptance. In workplaces, communication tools integrated with video conferencing platforms can enable remote and hybrid job opportunities for deaf individuals, thus improving workplace diversity and inclusivity.

On a broader societal level, these technologies help deaf and hard-of-hearing individuals take part more fully in social activities, access public services, and enjoy entertainment, enriching their personal and professional lives. By enabling smoother interactions across communities, emerging technologies support a more inclusive society in which language differences are less of a barrier.

5. How can sign language users get involved in the development and evaluation of these technologies?

Sign language users can play a crucial role in the development and evaluation of sign language interpretation technologies by participating in user testing and feedback processes. Developers often seek collaboration with deaf communities and advocacy groups to ensure that the tools they create are not only technologically advanced but also meet the practical needs of users.

By joining these initiatives and providing feedback, sign language users can help shape features, usability aspects, and the real-world application of emerging technologies. Participation in beta testing and research projects organized by tech companies is another way for users to have direct input in how these technologies evolve.

Additionally, getting involved in advocacy groups focused on accessibility and technology can amplify users’ voices in larger conversations about accessibility standards and innovation. Engaging with developers and researchers through workshops, focus groups, or surveys also offers valuable insight that can drive technological improvements.

Ultimately, through active participation in these processes, sign language users can ensure that new technologies are effective, relevant, and aligned with their community’s values and needs.